Introduction

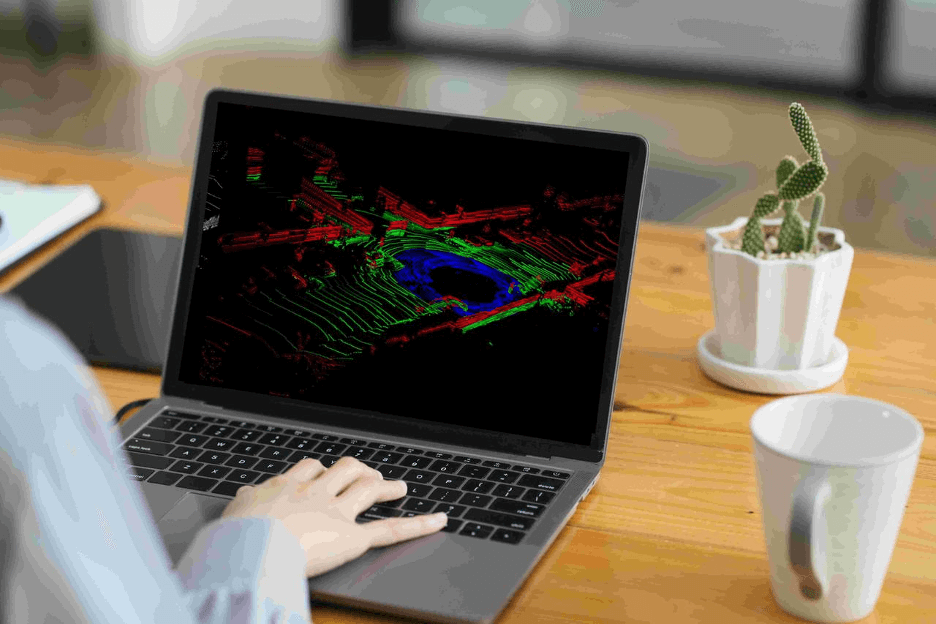

Point cloud data plays an increasingly important role in computer vision and machine learning. As a precise 3D representation, it gives AI models rich spatial information and finds applications in autonomous driving, smart manufacturing, urban planning, and cultural heritage preservation. Dynamic point clouds are essential for real-time applications such as autonomous driving, while static point clouds are pivotal in areas like urban planning and infrastructure inspection.

However, as use cases diversify, selecting the appropriate point cloud processing tool has become a key challenge. Efficient tools not only enhance data processing capabilities but also significantly improve algorithm performance and project outcomes. For dynamic point clouds, tools optimized for real-time processing and multi-sensor fusion are often preferred. On the other hand, for large-scale static point clouds, specialized tools like Pointly offer high-performance APIs and support both standard and custom classification models, giving them a unique advantage in processing extensive datasets of entire cities, highways, or railways.

This article aims to guide you through the process of choosing the most suitable point cloud solution from the myriad options available, with a primary focus on tools for dynamic point cloud processing. We will conduct an in-depth comparison of leading tools, analyze key factors to consider when making your selection, and explore future trends in the field. Whether you’re handling large-scale point cloud datasets or developing cutting-edge vision algorithms, this guide will provide valuable insights to help you navigate the rapidly evolving landscape of point cloud technology with confidence.

What is Point Cloud Annotation?

Point cloud annotation is the process of assigning semantic labels to points or groups of points within three-dimensional point cloud data. This step transforms raw 3D data into valuable training material for machine learning algorithms, with significant applications across autonomous driving, robotics, and numerous other fields. Annotation tasks may include labeling objects in the point cloud (such as “vehicle,” “pedestrian,” “building”), drawing 3D bounding boxes, or performing instance segmentation. Specialized software tools are typically used for this process, allowing annotators to visualize the point cloud from multiple angles, select points or regions, and apply appropriate labels. Accurate 3D point cloud annotation is crucial for training AI models to understand complex 3D environments.
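To make the idea of a 3D bounding box label concrete, here is a minimal sketch in plain Python. The `Cuboid` class, its field names, and the `label_points` helper are hypothetical and chosen for illustration only; real annotation tools each define their own label schema. The sketch assumes a box described by a center, a size, and a yaw angle about the vertical axis, and assigns a label to every point that falls inside it.

```python
import math
from dataclasses import dataclass


@dataclass
class Cuboid:
    """Hypothetical 3D box label: center, size, and yaw about the z axis."""
    label: str
    cx: float
    cy: float
    cz: float
    length: float  # extent along the box's local x axis
    width: float   # extent along the box's local y axis
    height: float  # extent along z
    yaw: float     # rotation about z, in radians

    def contains(self, x, y, z):
        # Shift the point into the box frame, then undo the yaw rotation.
        dx, dy, dz = x - self.cx, y - self.cy, z - self.cz
        c, s = math.cos(self.yaw), math.sin(self.yaw)
        local_x = c * dx + s * dy
        local_y = -s * dx + c * dy
        return (abs(local_x) <= self.length / 2
                and abs(local_y) <= self.width / 2
                and abs(dz) <= self.height / 2)


def label_points(points, cuboids, background="unlabeled"):
    """Give each point the label of the first cuboid that contains it."""
    return [
        next((box.label for box in cuboids if box.contains(x, y, z)), background)
        for x, y, z in points
    ]


car = Cuboid("vehicle", cx=10.0, cy=0.0, cz=1.0,
             length=4.0, width=2.0, height=2.0, yaw=math.pi / 2)
points = [(10.0, 1.5, 1.0), (11.5, 0.0, 1.0)]
print(label_points(points, [car]))  # ['vehicle', 'unlabeled']
```

Because the box is rotated by 90 degrees, its long side runs along the world y axis, which is why the first point is inside and the second is not.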

Exploring Notable Point Cloud Annotation Tools

The world of 3D point cloud annotation is rich with powerful tools, each bringing its own strengths to the table. From processing massive datasets to offering intuitive user interfaces, these market-leading platforms are shaping the future of 3D data annotation. Here’s a closer look at some of the standout options:

BasicAI

BasicAI Cloud is a comprehensive AI-assisted data annotation platform designed for a wide range of AI and machine learning projects, with particular strength in 3D point cloud annotation. The platform excels at processing large-scale datasets, handling over 150 million points across 300 frames, which makes it well suited to high-resolution LiDAR data. While BasicAI Cloud shines in autonomous driving applications, it also serves 3D point cloud annotation needs across multiple industries. With automatic annotation up to 82 times faster than manual methods and accuracy rates exceeding 98%, the platform significantly improves the efficiency and quality of complex dataset processing. BasicAI’s operation and navigation features surpass those of similar tools, with an intuitive interface that allows for quick adoption and ease of use.

Key Features

- Advanced object tracking across frame sequences

- Customizable confidence levels and class selection

- Multi-sensor fusion of 2D and 3D data

- Collaborative workflow with role-based permissions

- Multi-angle quality assurance system

iMerit

iMerit’s Ango Hub platform offers advanced 3D point cloud multi-sensor fusion solutions, delivering high-precision environmental perception for autonomous driving and robotics technologies. Ango Hub integrates data from LiDAR, radar, and cameras to create rich, accurate 3D scene representations. The platform supports various 3D point cloud formats and provides object detection, tracking, semantic segmentation, and instance segmentation capabilities. iMerit’s expert team leverages Ango Hub’s cutting-edge tools and techniques to ensure high-quality, efficient data annotation, providing a reliable foundation for AI model training.

Key Features

- Proprietary multi-sensor fusion algorithm

- Intelligent 3D-to-2D projection alignment

- Efficient multi-frame annotation system

- Customizable annotation workflows

- Dual AI-assisted and human quality review

- Advanced data security measures

Supervisely

Supervisely’s 3D LiDAR Sensor Fusion platform is a powerful data annotation and management tool suitable for a wide range of computer vision projects. The platform seamlessly integrates 2D images and 3D point cloud data, providing comprehensive environmental perception capabilities. Supervisely supports various data formats, including standard datasets like KITTI and nuScenes, and offers flexible data import and export options. The platform features 3D object detection, tracking, and segmentation functionalities, supports team collaboration and project management, and provides API access for automated workflows.

Key Features

- Intelligent 3D annotation tools (e.g., 3D cuboids, polygons)

- Automatic ground point removal

- Cross-frame annotation propagation

- Customizable labeling templates and attribute systems

- Built-in data version control
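As a rough illustration of what automatic ground point removal involves, the sketch below uses a deliberately naive height threshold. It is not Supervisely's implementation, which is not described here; the function name, the `tolerance` and `percentile` parameters, and the assumption of a roughly flat ground with the z axis pointing up are all illustrative choices.

```python
def remove_ground(points, tolerance=0.2, percentile=0.05):
    """Drop points close to an estimated ground height.

    Assumes z points up and the ground is roughly flat. The ground height
    is estimated as a low percentile of z, so a few stray low points do not
    dominate the estimate.
    """
    if not points:
        return []
    zs = sorted(p[2] for p in points)
    ground_z = zs[int(percentile * (len(zs) - 1))]
    # Keep only points that sit clearly above the estimated ground.
    return [p for p in points if p[2] > ground_z + tolerance]


scan = [(0, 0, 0.0), (1, 0, 0.05), (2, 0, 0.1), (3, 0, 1.5), (4, 0, 2.0)]
print(remove_ground(scan))  # [(3, 0, 1.5), (4, 0, 2.0)]
```

On sloped or uneven terrain a single threshold breaks down, and more robust approaches such as fitting a plane to the lowest points would be a natural next step.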

CVAT

CVAT (Computer Vision Annotation Tool) is an open-source platform that offers robust functionality for 3D point cloud data processing. The platform supports 3D bounding box annotation, enabling users to work with point cloud data from LiDAR and other sensors. CVAT features synchronized visualization of 2D images alongside 3D scenes, allowing for comprehensive understanding and annotation of complex 3D environments. The platform accommodates various data formats and provides interpolation tracking capabilities, facilitating the handling of dynamic objects in 3D scenes. With options for cloud-hosted services or self-hosted deployment, CVAT offers flexible solutions to meet diverse project requirements.

Key Features

- Open-source software with community-driven development

- 3D point cloud bounding box annotation

- Synchronized 2D image and 3D scene visualization

- Support for multiple 3D data formats

- Flexible cloud-hosted or self-hosted deployment options
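Interpolation tracking, mentioned above, generally means that an annotator labels an object on a few keyframes and the tool fills in the frames between them. The sketch below shows one simple way this could work, using linear interpolation of the box center and heading. It is an assumption-laden illustration, not CVAT's actual algorithm; the dictionary keys `frame`, `center`, and `yaw` are hypothetical.

```python
import math


def interpolate_box(key_a, key_b, frame):
    """Linearly interpolate a box between two annotated keyframes.

    key_a and key_b are dicts with 'frame' (int), 'center' (x, y, z),
    and 'yaw' (radians). The keys are illustrative, not a real tool's schema.
    """
    span = key_b["frame"] - key_a["frame"]
    if span <= 0 or not key_a["frame"] <= frame <= key_b["frame"]:
        raise ValueError("frame must lie between two ordered keyframes")
    t = (frame - key_a["frame"]) / span
    center = tuple(a + t * (b - a)
                   for a, b in zip(key_a["center"], key_b["center"]))
    # Rotate through the shortest angular difference so the box does not
    # spin the long way around when the yaw wraps past +/- pi.
    delta = (key_b["yaw"] - key_a["yaw"] + math.pi) % (2 * math.pi) - math.pi
    return {"frame": frame, "center": center, "yaw": key_a["yaw"] + t * delta}


start = {"frame": 0, "center": (0.0, 0.0, 0.0), "yaw": 0.0}
end = {"frame": 10, "center": (10.0, 5.0, 0.0), "yaw": math.pi / 2}
print(interpolate_box(start, end, 5)["center"])  # (5.0, 2.5, 0.0)
```

Linear interpolation is a reasonable default for objects moving at roughly constant speed; the annotator adds more keyframes wherever the motion changes.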

Kognic

Kognic offers robust point cloud annotation capabilities, empowering product teams to develop and manage 3D data processing workflows. The platform’s standout feature is its data curation overview, allowing users to select or group point cloud data prior to annotation. Kognic enhances the efficiency and quality of point cloud annotation through various pre-labeling methods and human-in-the-loop workflows. The platform supports synchronized viewing of 2D and 3D data, providing annotators with better context for point cloud data. While multi-sensor capabilities are limited, the platform does offer synchronized image viewing within the point cloud interface. Additionally, the quality assurance (QA) section includes an insights feature, presenting various metrics on point cloud data annotation to facilitate continuous improvement in labeling quality.

Key Features

- Data curation overview

- Human-in-the-loop point cloud annotation workflow

- Synchronized 2D and 3D data viewing

- Multi-sensor data processing (limited functionality)

- Quality assurance (QA) insights for point cloud annotation

- Customizable point cloud data processing pipeline support

Factors to Consider When Selecting a Point Cloud Tool

When choosing a point cloud annotation tool, several factors can significantly impact your project’s success. While many aspects deserve attention, six key considerations stand out:

High-Precision Annotation Capabilities: The cornerstone of any annotation tool is its ability to deliver accurate results. Whether it’s complex 3D bounding boxes, intricate polygons, or detailed semantic segmentation, the precision of annotations directly affects data quality and subsequent model training effectiveness.

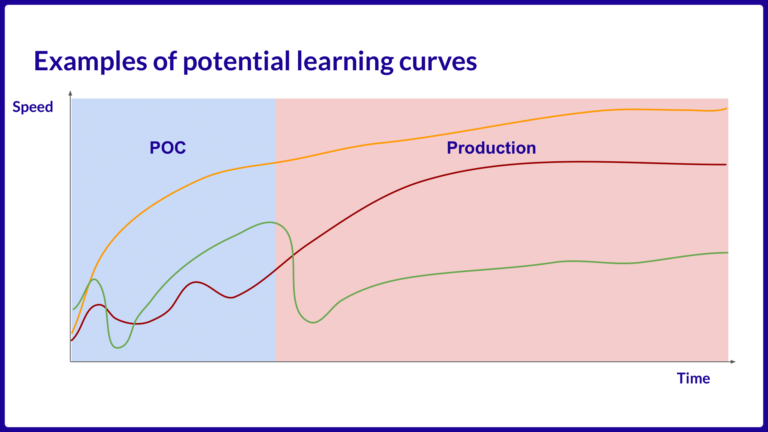

AI-Assistance Features: Tools equipped with AI-driven features like automatic object detection and cross-frame propagation can dramatically boost efficiency. These intelligent assistants not only speed up the annotation process but also help maintain consistency across large datasets.

Multi-Sensor Data Fusion: In complex 3D environments, the ability to process and display data from various sensors simultaneously is crucial. This integrated view enhances scene understanding, improving both the accuracy and speed of annotations, especially in applications like autonomous driving or robotics.
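At the core of multi-sensor fusion is the ability to map a LiDAR point onto a camera image so that both views can be shown together. The sketch below illustrates this with a standard pinhole camera model. The function name and parameters are hypothetical, and the calibration values in the usage example are made up; real values come from the sensor calibration of a given dataset.

```python
def project_to_image(point_lidar, rotation, translation, fx, fy, cx, cy):
    """Project one LiDAR point to pixel coordinates with a pinhole model.

    rotation (3x3 nested list) and translation (3 values) map the LiDAR
    frame into the camera frame, assumed here to be x right, y down,
    z forward. Returns (u, v), or None if the point is behind the camera.
    """
    xc, yc, zc = (
        sum(r * p for r, p in zip(row, point_lidar)) + t
        for row, t in zip(rotation, translation)
    )
    if zc <= 0:
        return None
    return (fx * xc / zc + cx, fy * yc / zc + cy)


# Assumed LiDAR frame: x forward, y left, z up (a common convention).
lidar_to_camera = [[0, -1, 0], [0, 0, -1], [1, 0, 0]]
pixel = project_to_image((10.0, 1.0, 0.5), lidar_to_camera, (0, 0, 0),
                         fx=1000, fy=1000, cx=640, cy=360)
print(pixel)  # (540.0, 310.0)
```

A point 10 m ahead, slightly to the left and above the sensor, lands left of and above the image center, as expected.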

High-Performance Large-Scale Data Processing: As datasets grow, the tool’s capacity to handle massive point clouds becomes increasingly important. High performance ensures smooth operation even with millions of points, maintaining productivity in real-world, large-scale projects.
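One common way to keep very large point clouds responsive is voxel downsampling, where all points inside each small cube are replaced by a single representative point. The sketch below is a minimal pure-Python version under that assumption; it is not taken from any of the tools discussed, and the function name is illustrative.

```python
import math
from collections import defaultdict


def voxel_downsample(points, voxel_size):
    """Replace the points in each cubic voxel with their centroid."""
    if voxel_size <= 0:
        raise ValueError("voxel_size must be positive")
    buckets = defaultdict(list)
    for point in points:
        # Points that share integer grid coordinates share a voxel.
        key = tuple(math.floor(c / voxel_size) for c in point)
        buckets[key].append(point)
    return [
        tuple(sum(axis) / len(group) for axis in zip(*group))
        for group in buckets.values()
    ]


cloud = [(0.1, 0.1, 0.1), (0.3, 0.3, 0.3), (1.2, 0.0, 0.0)]
print(len(voxel_downsample(cloud, voxel_size=1.0)))  # 2
```

A larger `voxel_size` gives a lighter cloud for navigation and overview, while the full-resolution data remains available for detailed labeling.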

Collaboration and Project Management Capabilities: For team-based projects, features supporting multi-user collaboration, task allocation, and progress tracking are essential. These capabilities ensure efficient teamwork and streamlined project management, particularly crucial for large or distributed teams.

API and Integration Capabilities: A tool with robust APIs and integration options can seamlessly fit into existing workflows and connect with other systems. This flexibility is key for building efficient data processing pipelines and adapting to evolving project needs.

Future Trends

As we navigate the current landscape of 3D point cloud annotation, it’s crucial to look ahead. Let’s explore some key trends that are likely to shape the future of this rapidly evolving field:

Multi-resolution Point Cloud Processing: Future annotation tools will intelligently handle point cloud data at various resolutions. They will be able to quickly perform rough annotations at low resolutions, and then refine key areas at higher resolutions, striking a balance between efficiency and accuracy.
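The coarse-then-refine idea can be sketched as a two-level lookup: rough labels are attached to large voxels, and finer labels, where they exist, override them. This is a speculative illustration of the trend described above rather than a description of any existing tool; the function names and the voxel-keyed dictionaries are assumptions.

```python
import math


def voxel_key(point, voxel_size):
    """Integer grid coordinates of the voxel containing the point."""
    return tuple(math.floor(c / voxel_size) for c in point)


def resolve_label(point, coarse_labels, fine_labels,
                  coarse_size, fine_size, default="unlabeled"):
    """Prefer a fine-resolution label, fall back to the coarse one."""
    fine = fine_labels.get(voxel_key(point, fine_size))
    if fine is not None:
        return fine
    return coarse_labels.get(voxel_key(point, coarse_size), default)


# A quick coarse pass marks a 2 m voxel as "building"; a later refinement
# pass marks one 0.5 m voxel inside it as "pedestrian".
coarse = {(0, 0, 0): "building"}
fine = {(1, 1, 0): "pedestrian"}
for p in [(0.6, 0.7, 0.2), (1.5, 1.5, 0.5), (5.0, 5.0, 5.0)]:
    print(resolve_label(p, coarse, fine, coarse_size=2.0, fine_size=0.5))
# pedestrian
# building
# unlabeled
```

Only the regions that need detail receive fine labels, so most of the scene is covered by the cheaper coarse pass.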

Efficient Annotation of Large-scale Sparse Point Clouds: As LiDAR technology evolves, point cloud data is becoming increasingly large and sparse. Future annotation systems will be specifically optimized to efficiently process these large-scale sparse datasets, employing advanced sampling and interpolation techniques to improve annotation efficiency and quality.

Human-AI Collaborative Ecosystem: Future systems will go beyond simple AI assistance, creating dynamic ecosystems where human annotators and AI agents learn from and improve each other. This deep collaboration will lead to continuous gains in annotation quality and efficiency, capitalizing on the complementary strengths of human and artificial intelligence.

Real-time Annotation of Dynamic Point Clouds: Future 3D point cloud annotation tools will develop the capability to process and label dynamic, continuously updating point cloud streams. This advancement is driven primarily by the specific demands of 3D data processing rather than by progress in general AI models alone. It will enable near real-time 3D scene understanding for applications that must analyze dynamic environments.

Conclusion

Point cloud annotation is akin to a voyage of discovery across a digital ocean. Each data point is an island in the vast sea, waiting to be discovered and mapped. Our annotation tools are our vessels, and choosing the right tool is like selecting a sturdy ship capable of weathering any storm.

Today, we’re charting the archipelagos of autonomous driving and the continents of robotics, tracing the initial routes of technological progress. Looking ahead, our journey will extend to the fertile plains of smart agriculture, the bustling ports of efficient logistics, and the glittering island chains of smart cities.

In this digital age of exploration, human adventurers and AI navigation systems work side by side, jointly creating a grand nautical chart that spans multiple domains.