Honeypot is a process for ensuring higher-quality annotated batches. In the context of quality metrics, honeypot (or ground truth) is one of four workflows used to evaluate the correctness of labeled data.

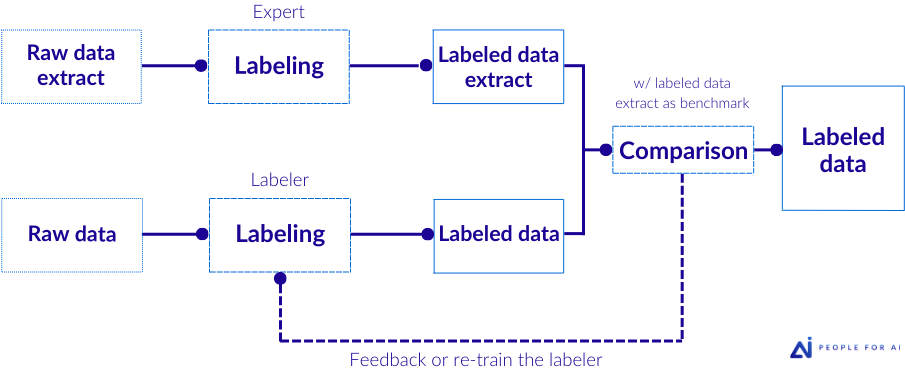

At the project’s outset, an expert (often the client) accurately labels an extract of the data. We then use this extract, known as “the honeypot,” as a benchmark to assess the quality of subsequent labels provided by annotators. In this workflow, each piece of data is still annotated by a single annotator; the only addition is the expert labeler who prepares the honeypot at the start.
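The benchmark comparison described above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the function name `honeypot_accuracy`, the data layout, and the sample labels are all hypothetical, and it assumes that quality is scored by exact agreement with the expert label, which suits simple classification tasks only.

```python
from collections import defaultdict


def honeypot_accuracy(honeypot, annotations):
    """Return each annotator's share of honeypot items labeled like the expert.

    honeypot: dict mapping item id -> expert label (hypothetical layout)
    annotations: iterable of (annotator, item_id, label) tuples
    """
    correct = defaultdict(int)
    seen = defaultdict(int)
    for annotator, item_id, label in annotations:
        if item_id not in honeypot:
            # Not a honeypot item, so there is no expert label to compare with.
            continue
        seen[annotator] += 1
        # Assumption: exact match counts as correct.
        if label == honeypot[item_id]:
            correct[annotator] += 1
    return {annotator: correct[annotator] / seen[annotator] for annotator in seen}


# Expert labels prepared at the project's outset (made-up sample data).
honeypot = {"img_1": "cat", "img_2": "dog"}

# Labels produced later by annotators; img_3 is not part of the honeypot.
annotations = [
    ("alice", "img_1", "cat"),
    ("alice", "img_2", "dog"),
    ("bob", "img_1", "cat"),
    ("bob", "img_2", "cat"),
    ("bob", "img_3", "dog"),
]

scores = honeypot_accuracy(honeypot, annotations)
print(scores)
```

Tasks such as bounding boxes or segmentation would likely need a different agreement measure than exact match, but the structure stays the same: compare each annotator's labels with the expert's on the honeypot items only.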

The four workflows for evaluating the correctness of labeled data and, therefore, measuring annotation quality are: without validation, with review, consensus voting, and honeypot. Interested in better understanding how to evaluate quality in a data labeling project? Take a look at our article!

Synonyms: Ground truth